Buying Experience and Rollout Strategy

The Meta Display glasses are the most affordable step yet toward ambient, wearable AI computing. Ambient computing complements the real world rather than replacing it, and bringing that category to market at this price is a major accomplishment. At $799, the glasses cost less than a quarter of the $3,499 Vision Pro.

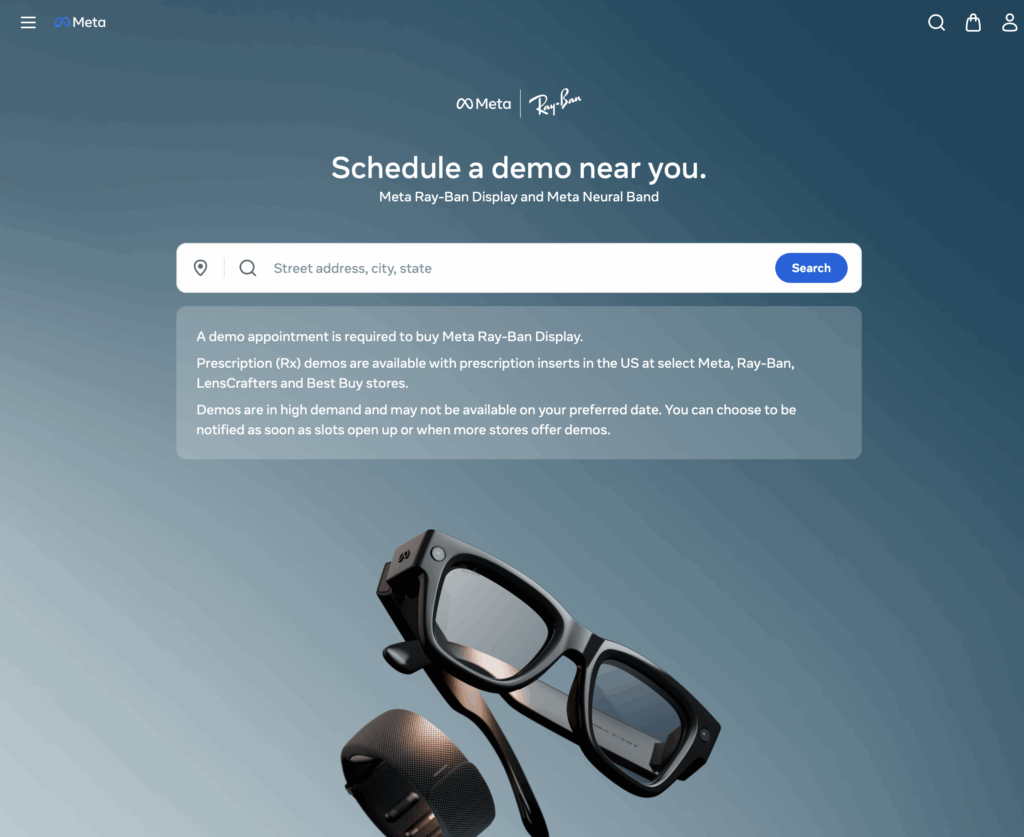

Meta’s sales model for its Display glasses reflects the fact that buyers need to be fitted for both the glasses and the Neural Band. As the first line of the Meta Display landing page states, “A demo appointment is required to buy Meta Ray-Ban Display.”

The glasses are available only through in-person demos at select Best Buy, LensCrafters, Ray-Ban, and Sunglass Hut stores, with Verizon stores coming soon. This measured rollout indicates that Meta is prioritizing fit accuracy, message control, and early-adopter quality over scale.

At Best Buy, the glasses are sold by Meta employees rather than regular store staff. It appears Meta hired local Best Buy retail personnel and trained them itself, which underscores the degree of control the company wants to maintain over the rollout.

The rep explained that Meta chose a demo-first approach because the Neural Band, a crucial accessory for the glasses to function, must be sized correctly. If the band is too small or too large for the user’s wrist, gestures do not register properly and can become “highly exaggerated.” Gating access through demos introduces friction, but it also calibrates expectations, ensuring first impressions align with what the product can actually deliver.

I expect that by the end of the year you will be able to order a pair online.